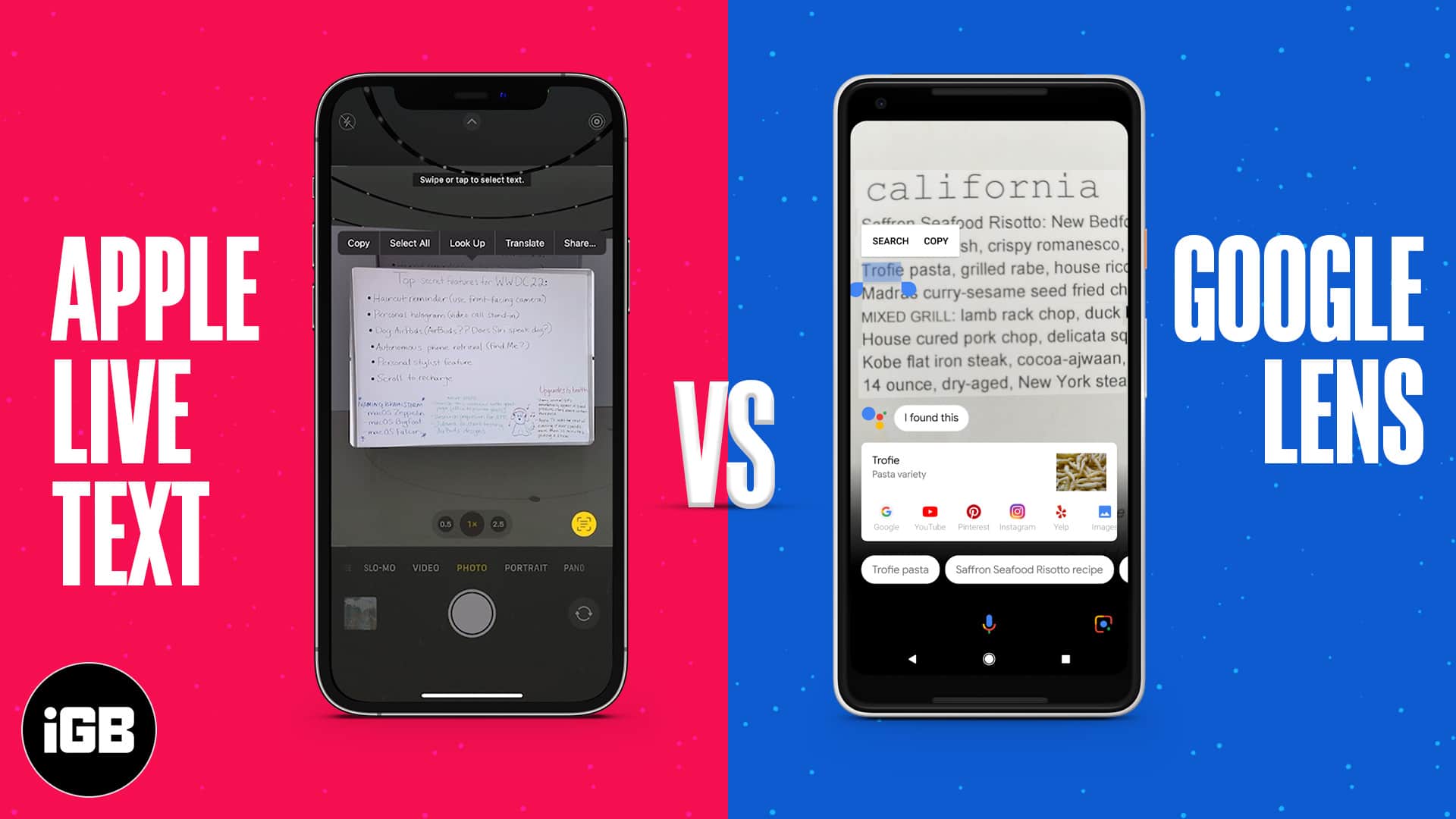

Ever wanted to copy text from an image or poster, or look up its meaning or translation? Apple’s new image-recognition feature, Live Text, makes that easy. It can also recognize objects, plants, animals, and monuments, making it easier to understand the world around you.

But how well does it actually work? I decided to compare Apple Live Text vs. Google Lens, which has similar capabilities and has been around for much longer. Let’s learn more about both and see how each performs.

- Live Text vs. Google Lens: Compatibility

- Live Text vs. Google Lens: Features

- Live Text vs. Google Lens: Text recognition

- Live Text vs. Google Lens: Translation

- Live Text vs. Google Lens: Visual Lookup

- Live Text vs. Google Lens: Ease of use

- Live Text vs. Google Lens: Accuracy

- Live Text vs. Google Lens: Privacy

Live Text vs. Google Lens: Compatibility

First off, Live Text is available exclusively on Apple devices. It is compatible with:

- iPhones with the A12 Bionic chip or later, running iOS 15

- iPad mini (5th generation) and later, iPad Air (2019, 3rd generation) and later, iPad (2020, 8th generation) and later, iPad Pro (2020) and later, running iPadOS 15

- Macs with the M1 chip running macOS Monterey

Google Lens, on the other hand, is available on both iOS and Android devices. For this article, I compared iOS 15’s Live Text and Google Lens on the same iPhone 11.

Live Text vs. Google Lens: Features

Live Text is essentially Apple’s answer to Google Lens, so the two offer many similar features. Let’s look at each in more detail.

What is iOS 15 Live Text?

Live Text in iOS 15 adds smart text and image recognition capabilities to your iPhone camera. You can use it to pull text out of images, either by pointing your camera at the text or by opening a photo in your library. You can then look up the text online, copy it, translate it into a supported language, or share it.

Live Text also includes Visual Lookup, which lets you find details about objects, animals, plants, and places by pointing your camera at them or by analyzing photos of them.

What is Google Lens?

Google Lens launched in 2017 as a preview preinstalled on the Google Pixel 2. It later rolled out as a stand-alone app for Android phones and is now integrated into the camera app on high-end Android devices.

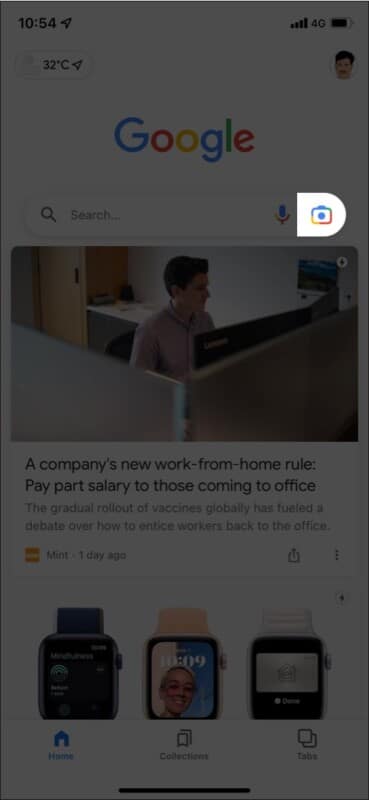

On iOS, Google Lens is available within the Google app. It offers text recognition, translation, object and place recognition, barcode and QR code scanning, and even help with homework questions for students.

Because it has been around for a few years, Google Lens is more mature and currently works much better than Apple Live Text. I tested and compared the main capabilities of both, and the results are explained below.

Live Text vs. Google Lens: Text recognition

After multiple rounds of testing with different kinds of text, I found that Live Text is currently hit or miss. Sometimes it works, and other times it simply does not recognize the text.

This is true whether you try to use it on an image of text or directly while pointing the camera at some text.

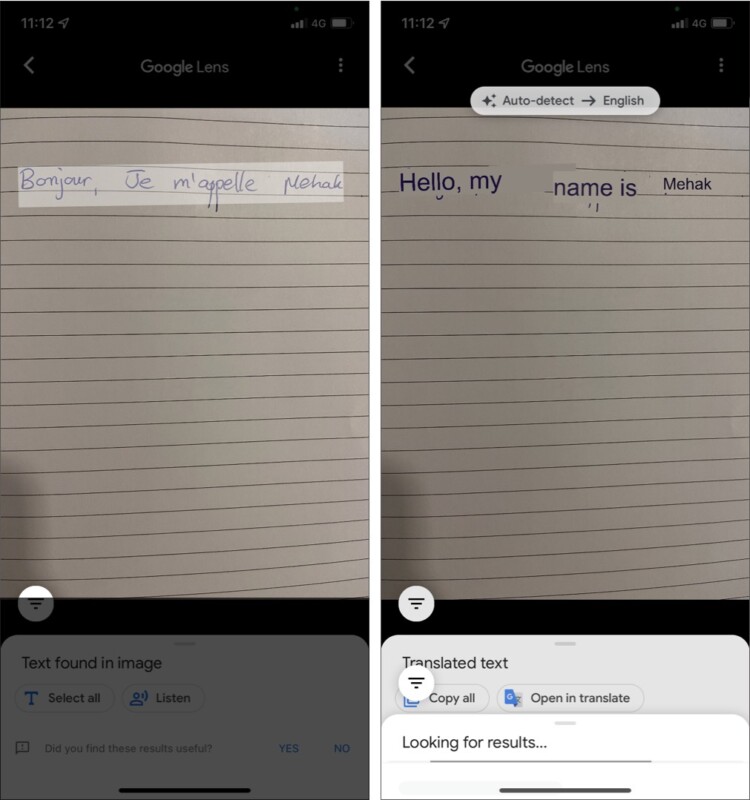

Handwriting recognition did not work at all. This may be because iOS 15 is still in beta, so I look forward to testing text recognition again once the official release is out.

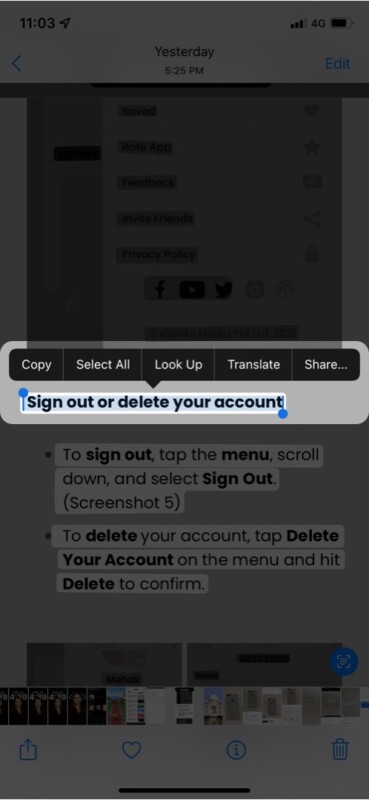

To use Live Text, tap the Live Text icon at the bottom right of an image. This icon only appears when the system has detected some text in the image.

To recognize text in the camera viewfinder, tap the general area of the text, then tap the yellow icon at the bottom right of the screen.

As mentioned above, the iOS 15 beta is currently not very good at detecting text in images, so the feature works only intermittently.

The few times that text recognition did work, I could select the text to see contextual actions: Copy, Select All, Look Up, Translate, and Share.

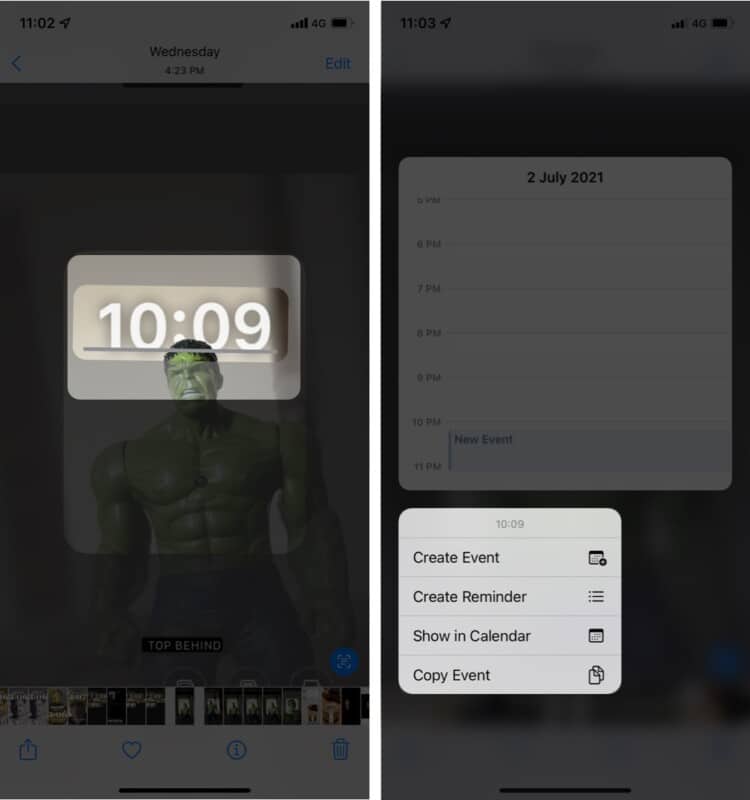

Similarly, when Live Text recognized a time, it offered to add a reminder to my calendar.

However, despite multiple attempts, Live Text did not recognize phone numbers or addresses.

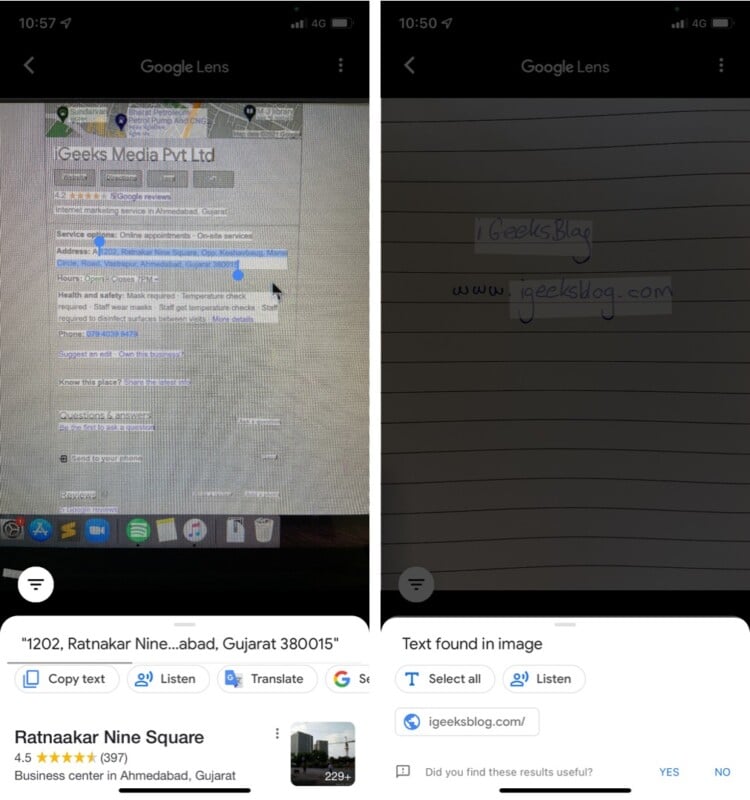

In comparison, Google Lens does an exceptional job of recognizing all kinds of text, including handwriting. It also provided the relevant contextual actions for phone numbers, web addresses, street addresses, and more. For now, it is the clear winner and really convenient.

Live Text vs. Google Lens: Translation

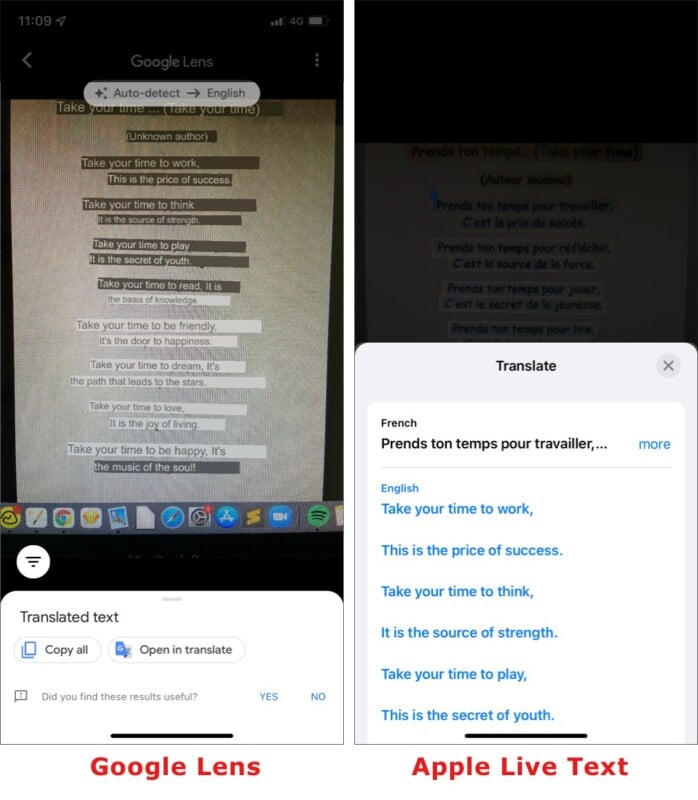

Apple Live Text currently supports just seven languages, while Google Lens supports all 108 languages that Google Translate does.

Google Lens overlays the translation directly on the text in the image, while Live Text displays it below the image.

Both seemed pretty accurate, but Google Lens has the added benefit of translating handwriting, which is pretty cool!

Live Text vs. Google Lens: Visual Lookup

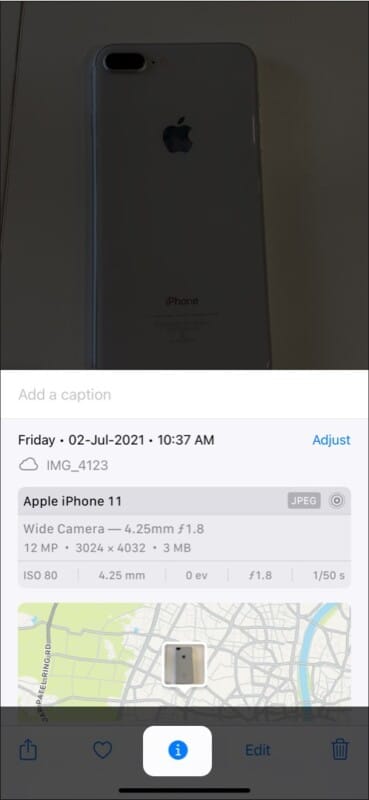

Visual Lookup is a feature that lets you recognize objects, monuments, plants, and animals in photos by tapping the small ‘i’ icon below the image.

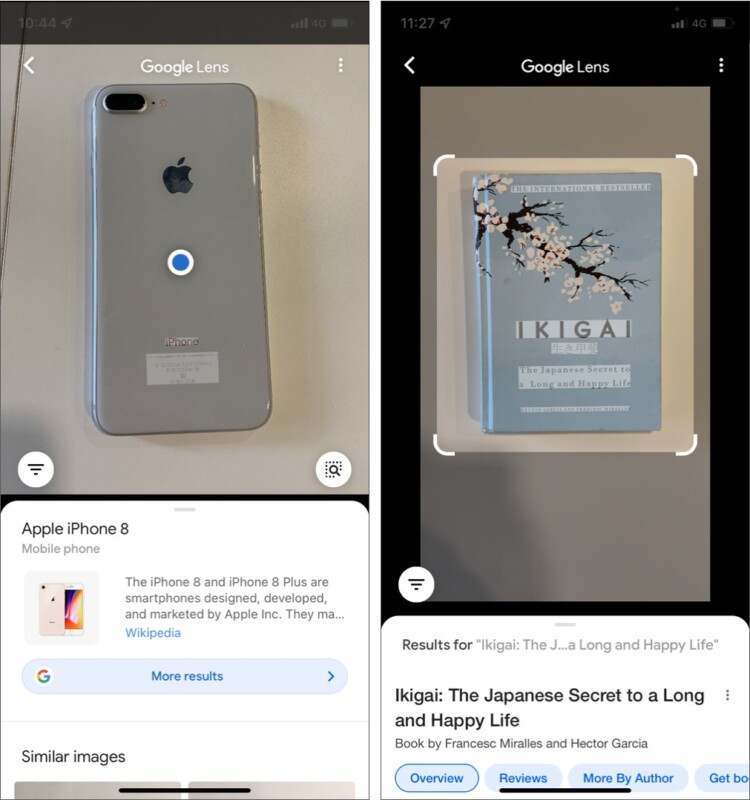

Identifying objects

Unfortunately, this feature does not seem to work in the iOS 15 beta. I tried various easily identifiable objects, such as iPhones and books, but no results came up.

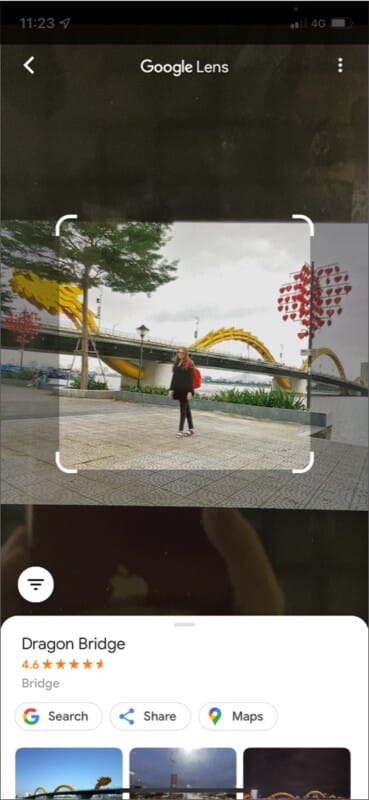

Google Lens had no problem identifying things, as shown in the screenshots below.

Identifying landmarks

Apple Live Text did not fetch any results when I tried to look up locations in photos.

Google Lens was quite good at identifying landmarks, as in this photo of me in Danang, Vietnam.

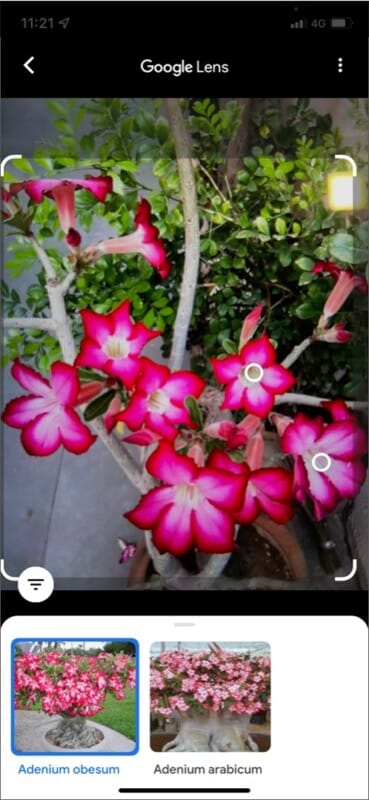

Identifying plants and flowers

This, too, did not work on Apple Live Text, but Google Lens could accurately identify flowers and plants.

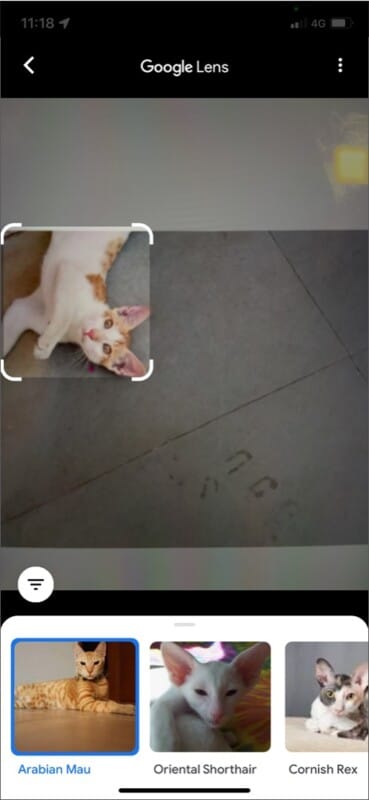

Identifying animals

Like the other Visual Lookup features in Live Text, this does not seem to work in the iOS 15 beta. Google Lens, meanwhile, identified my cat but was unsure about the breed.

Live Text vs. Google Lens: Ease of use

Although most Live Text features aren’t currently working as they should, one area where it wins is ease of use. Its integration into the iPhone Camera app makes it incredibly intuitive and handy. I expect it to be a hit once iOS 15 is officially released and Live Text works at full capacity.

In comparison, Google Lens is a bit clunky: to access it, you have to open the Google app and tap the Google Lens icon, then swipe to select the specific feature you want to use.

Live Text vs. Google Lens: Accuracy

At this time, Google Lens is far more accurate than Apple Live Text at recognizing text, handwriting, addresses, phone numbers, objects, places, and more. This is likely because Google has far more data and has had years to refine Google Lens.

Nonetheless, I expect Live Text to improve significantly in the year after its official release.

Live Text vs. Google Lens: Privacy

Google is notorious for tracking and using your data to personalize your experience, improve its services, further AI development, and more. So it is safe to assume that data about what you look up using Google Lens is stored.

Although I could not find much information about Live Text’s privacy practices, Apple prioritizes privacy, so it will probably be built into Live Text as well. If so, you likely won’t have to worry about your data being stored on a server or shared with third parties. Watch this space for updates after the official public launch of iOS 15.

Verdict: iOS 15’s Live Text vs. Google Lens – which is better?

That wraps up my comparison of image recognition tools from Google and Apple. Because Google Lens has been around for a few years, it is more advanced than Live Text when it comes to recognizing and translating text, looking up objects and places online, and more.

However, it can’t beat Apple when it comes to ease of use, integration with the Apple ecosystem, and, of course, privacy. The convenience that Google Lens offers comes at the cost of your data. You can also use Google Lens on your Mac through Google Chrome, Safari, or other browsers.

So, although Apple has some catching up to do in image recognition, Live Text is still a great built-in feature for Apple users that’s set to enhance the way you use your iPhone and other Apple devices. What do you think? Let me know in the comments below.
